Image Source: https://github.com/ItzCrazyKns/Perplexica

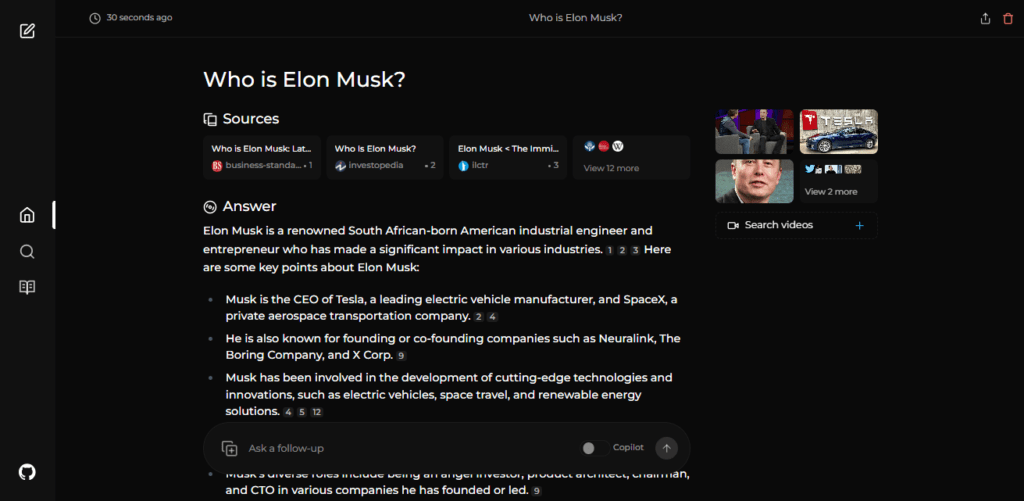

Perplexica is an AI-powered search engine that explores the depths of the internet to find answers. Inspired by Perplexity AI, this open-source tool not only searches the web but also understands your queries. It uses machine learning techniques such as similarity search and embeddings to refine search results and provide clear answers with cited sources.

Features of Perplexica

- Local LLMs

- Copilot Mode

- Normal Mode

- Focus Mode

- All Mode

- Writing Assistant Mode

- Academic Search Mode

- YouTube Search Mode

- Wolfram Alpha Search Mode

- Reddit Search Mode

It also offers other features, such as image and video search. Some of the planned features are listed under upcoming features in the GitHub repository.

Installation

There are two main ways of installing Perplexica: with Docker or without Docker. Using Docker is highly recommended.

Getting Started with Docker (Recommended)

- Ensure Docker is installed and running on your system.

- Clone the Perplexica repository: `git clone https://github.com/ItzCrazyKns/Perplexica.git`

- After cloning, navigate to the directory containing the project files.

- Rename the `sample.config.toml` file to `config.toml`. For Docker setups, you need only fill in the following fields:

  - `CHAT_MODEL`: The name of the LLM to use, for example `llama3:latest` (using Ollama) or `gpt-3.5-turbo` (using OpenAI).

  - `CHAT_MODEL_PROVIDER`: The chat model provider, either `openai` or `ollama`. Depending on which provider you use, fill in the matching field below:

    - `OPENAI`: Your OpenAI API key. You only need to fill this in if you wish to use OpenAI’s models.

    - `OLLAMA`: Your Ollama API URL. Enter it as `http://host.docker.internal:PORT_NUMBER`. If you installed Ollama on port 11434, use `http://host.docker.internal:11434`. For other ports, adjust accordingly. You need to fill this in if you wish to use Ollama’s models instead of OpenAI’s.

    Note: You can also change these values and use different models from the settings page after running Perplexica.

  - `SIMILARITY_MEASURE`: The similarity measure to use. (This is filled in by default; you can leave it as is if you are unsure about it.)

- Ensure you are in the directory containing the `docker-compose.yaml` file and execute: `docker compose up -d`

- Wait a few minutes for the setup to complete. You can access Perplexica at http://localhost:3000 in your web browser.
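The `OLLAMA` value described in the steps above always has the same shape, so it can be built from the port number alone. The sketch below shows one way to do that; `ollama_url` is a hypothetical helper written for this illustration and is not part of Perplexica.

```shell
#!/bin/sh
# ollama_url [PORT]: print the value to put in the OLLAMA field of config.toml.
# Hypothetical helper for illustration; 11434 is the port used in the example
# in the steps above.
ollama_url() {
    port="${1:-11434}"
    # Reject anything that is not a plain number.
    case "$port" in
        ''|*[!0-9]*)
            echo "port must be a number: $port" >&2
            return 1
            ;;
    esac
    printf 'http://host.docker.internal:%s\n' "$port"
}

ollama_url          # prints http://host.docker.internal:11434
ollama_url 8080     # prints http://host.docker.internal:8080
```

Copy the printed URL into the `OLLAMA` field of `config.toml` before starting the containers.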

Note: After the containers are built, you can start Perplexica directly from Docker without having to open a terminal.
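The Docker steps above can be collected into a small script. This is only a sketch: the `run`, `prepare_repo`, and `start_containers` helpers and the `DRY_RUN` switch are assumptions made for this example, and only the commands they wrap come from the steps themselves. By default the script prints the commands instead of executing them.

```shell
#!/bin/sh
# Dry-run sketch of the Docker setup steps.
# DRY_RUN=1 (the default here) only prints each command; DRY_RUN=0 executes it.
DRY_RUN="${DRY_RUN:-1}"

# run CMD...: print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Clone the repository, enter it, and create config.toml from the sample file.
prepare_repo() {
    run git clone https://github.com/ItzCrazyKns/Perplexica.git || return 1
    run cd Perplexica || return 1
    run mv sample.config.toml config.toml
}

# Start the containers in the background. Call this only after config.toml
# has been filled in.
start_containers() {
    run docker compose up -d
}

# In dry-run mode, show the whole sequence of commands.
if [ "$DRY_RUN" = "1" ]; then
    prepare_repo
    start_containers
fi
```

To run the steps for real, source the file with `DRY_RUN=0`, call `prepare_repo`, edit `config.toml`, and then call `start_containers` from the same shell.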

Non-Docker Installation

- Clone the repository and rename the `sample.config.toml` file to `config.toml` in the root directory. Ensure you complete all required fields in this file.

- Rename the `.env.example` file to `.env` in the `ui` folder and fill in all necessary fields.

- After populating the configuration and environment files, run `npm i` in both the `ui` folder and the root directory.

- Install the dependencies and then execute `npm run build` in both the `ui` folder and the root directory.

- Finally, start both the frontend and the backend by running `npm run start` in both the `ui` folder and the root directory.

Note: Using Docker is recommended as it simplifies the setup process, especially for managing environment variables and dependencies.
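For the non-Docker route, the install and build steps repeat the same commands in the root directory and in the `ui` folder, which a short script can make explicit. The sketch below assumes it is run from the root of the cloned repository; `in_dir`, `install_and_build`, and the `DRY_RUN` switch are hypothetical names introduced for this example. By default it only prints the commands.

```shell
#!/bin/sh
# Dry-run sketch of the non-Docker install and build steps.
# DRY_RUN=1 (the default here) only prints each command; DRY_RUN=0 executes it.
DRY_RUN="${DRY_RUN:-1}"

# in_dir DIR CMD...: run CMD inside DIR using a subshell, or just print it.
in_dir() {
    target="$1"
    shift
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ (cd $target && $*)"
    else
        (cd "$target" && "$@")
    fi
}

# Install dependencies in both locations, then build both.
install_and_build() {
    for d in . ui; do
        in_dir "$d" npm i || return 1
    done
    for d in . ui; do
        in_dir "$d" npm run build || return 1
    done
}

# In dry-run mode, show the commands that would be executed.
if [ "$DRY_RUN" = "1" ]; then
    install_and_build
fi
```

The final `npm run start` commands are left out of the script on the assumption that each one keeps running in the foreground, so the backend (root directory) and the frontend (`ui` folder) would each be started in a terminal of their own.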

FROM GITHUB:

Thank you for exploring Perplexica, the AI-powered search engine designed to enhance your search experience. We are constantly working to improve Perplexica and expand its capabilities. We value your feedback and contributions which help us make Perplexica even better. Don’t forget to check back for updates and new features!

GITHUB REPOSITORY

https://github.com/ItzCrazyKns/Perplexica

All Rights Reserved To The Respective Owners.